Tackling the DeepFake Detection Challenge

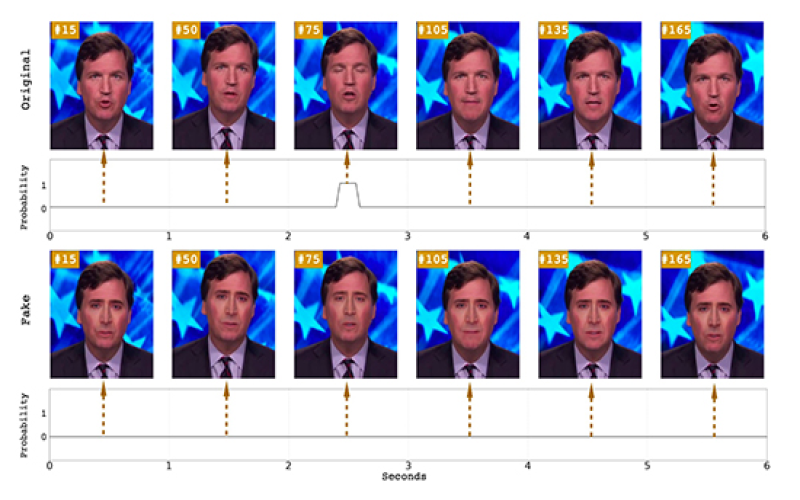

Professor of Computer Science Siwei Lyu is leading research that uses eye blinking, shown here in an original video (top) and a DeepFake video (bottom), to determine whether a video is counterfeit. Lyu is working with Facebook to improve detection efforts. (Graphic by Yuezun Li, Ming-Ching Chang and Siwei Lyu).

ALBANY, N.Y. (Sept. 20, 2019) — In the world of artificial intelligence, the computer learning process known as DeepFake has become synonymous with the ever-onward advance of machine learning. Now researchers at a number of institutions — including the University at Albany — are teaming with Facebook, Microsoft and the Partnership on AI to enhance DeepFake detection capabilities, and combat the fake video-producing technology on a global level.

In launching the “DeepFake Detection Challenge,” Facebook hopes to develop new ways of detecting and preventing AI-manipulated media from being used to mislead others.

UAlbany Professor of Computer Science Siwei Lyu is at the forefront of DeepFake detection. He has developed algorithms that have helped expose fake videos by tracking how eye movement in bogus content differs from that in legitimate sources. On Sept. 26, Lyu will speak before the U.S. House of Representatives at a hearing on “Online Imposters and Disinformation.” The panel is being organized by the Subcommittee on Investigations & Oversight of the House Committee on Science, Space and Technology. You can stream the hearing, which begins at 2 p.m.

Lyu’s research team at the College of Engineering and Applied Sciences (CEAS) will be a key part of the partnership, for which Facebook is dedicating more than $10 million to fund an industry-wide campaign.

“Deepfake techniques, which present realistic AI-generated videos of real people doing and saying fictional things, have significant implications for determining the legitimacy of information presented online. Yet the industry doesn't have a great data set or benchmark for detecting them. We want to catalyze more research and development in this area and ensure that there are better open source tools to detect DeepFakes,” writes Mike Schroepfer, Chief Technology Officer at Facebook, in announcing the challenge.

“Although DeepFakes may look realistic, the fact that they are generated from an algorithm instead of real events captured by camera means they can still be detected and their provenance verified,” said Lyu in providing invited commentary for Facebook’s announcement. “Several promising new methods for spotting and mitigating the harmful effects of deepfakes are coming on stream, including procedures for adding ‘digital fingerprints’ to video footage to help verify its authenticity. As with any complex problem, it needs a joint effort from the technical community, government agencies, media, platform companies, and every online user to combat their negative impact.”

“The current arms race between those who seek to deceive entire populations and those trying to stop them poses serious implications for societal stability, domestic and international policy, public safety, international economics, and the proper functioning of democratic governance around the world,” said CEAS Dean Kim L. Boyer. “The work of Professor Lyu and the other participants in the DeepFake Detection Challenge is critically important to meeting this threat and ensuring the availability of trusted, authoritative news sources. This work exemplifies the college’s mission: ‘Science in Service to Society.’”

Lyu will be joined in this effort by researchers from Cornell Tech, MIT, University of Oxford, UC Berkeley and the University of Maryland, College Park.

The lead researcher at Berkeley is Professor Hany Farid, M.S. ’92, who served as Lyu’s doctoral advisor while Lyu was pursuing his Ph.D. at Dartmouth College.

“In order to move from the information age to the knowledge age, we must do better in distinguishing the real from the fake, reward trusted content over untrusted content, and educate the next generation to be better digital citizens,” said Farid, now a professor in the Department of Electrical Engineering & Computer Science and the School of Information at UC Berkeley. “This will require investments across the board, including in industry/university/NGO research efforts to develop and operationalize technology that can quickly and accurately determine which content is authentic.”

In summarizing the effort, Schroepfer addressed the difficulty researchers face in combating the spread of malicious content, which has been used to create fake news stories and to manipulate pornographic content so that it appears to feature Hollywood celebrities.

“This is a constantly evolving problem, much like spam or other adversarial challenges, and our hope is that by helping the industry and AI community come together we can make faster progress,” continued Schroepfer.